Primary Tools

R & RStudio, RMarkdown, Shiny

Code.org (computer science education nonprofit)

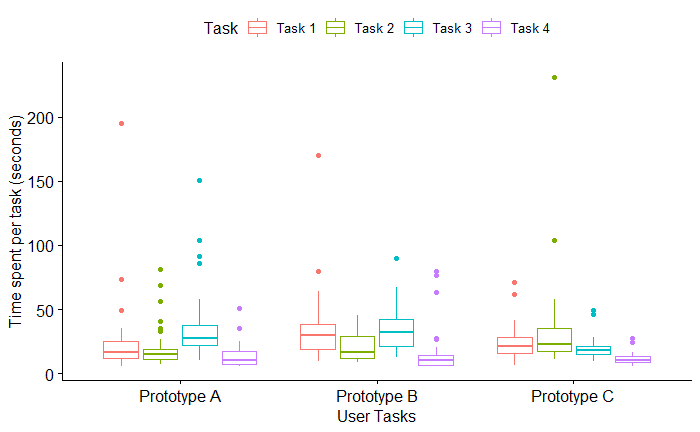

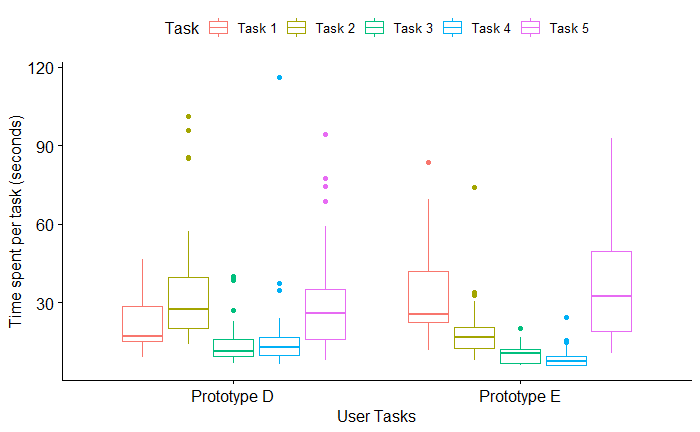

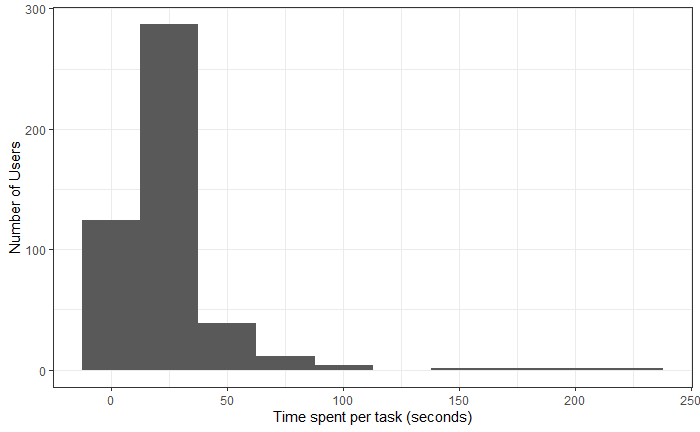

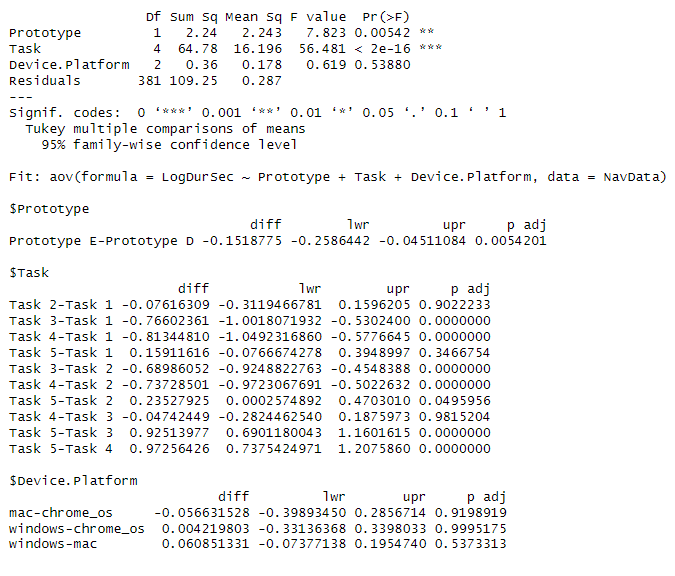

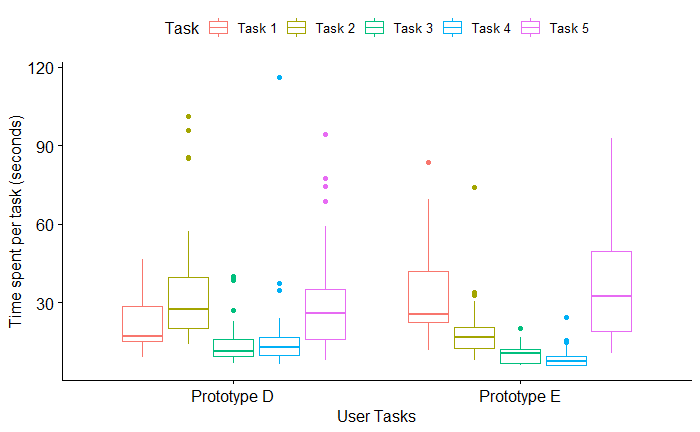

Measure user performance on specific tasks across multiple navigation prototypes.

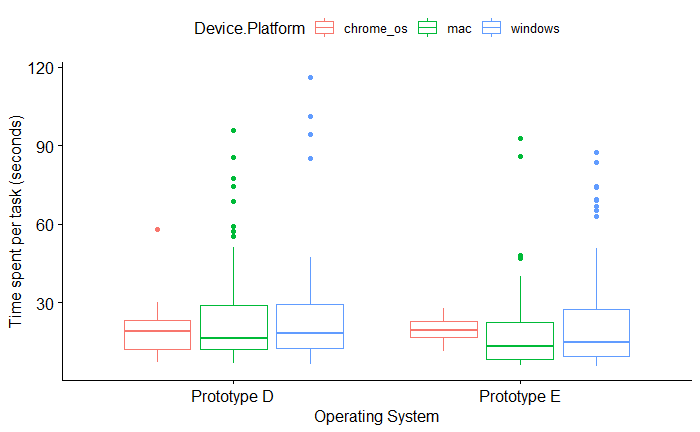

The client is redesigning their navigation to improve user performance on specific tasks. They have designed several navigation prototypes and conducted multiple rounds of user testing on each. The client needs insightful data analysis that turns these test results into actionable design decisions.

Our team helped the client formulate and test hypotheses comparing user performance across prototypes. We produced a data report identifying which prototypes performed best. Our analysis also revealed important performance patterns by users' operating system and screen size. Finally, we consulted on design modifications to maximize the client’s target metrics while minimizing error rates.